Machine Learning and Physics

Profs. Tristan Bereau and Fred Hamprecht

Machine learning has become a transformational force that is profoundly impacting society in ways both good and bad. On the good side, machine learning fuels scientific breakthroughs such as solving the protein folding problem, or creating large language models that by now show sparks of artificial general intelligence. On the bad side, convincing deep fakes are used for manipulation, and machine learning supports surveillance in totalitarian states.

Contents

This course takes a two-pronged approach:

Physics of Machine Learning: Highlight physical ideas and concepts that drive ML

Machine Learning for Physics: Equip you with tools to help conduct, and interpret, future experiments

Curriculum

- Introduction & linear dimension reduction

- Nonlinear dimension reduction: connection to statistical mechanics; UMAP

- Nonparametric density estimation (KDE, RV, expectation), mean shift

- Linear regression

- Regularized regression: ridge, lasso

- Statistical decision theory, Classification

- Parametric & generative methods (QDA). CART.

- Cross-validation, double descent

- Logistic regression, generalized linear models, softmax

- Multi-layer perceptrons

- Training of neural networks. Batchnorm

- SGD with momentum. Adam. Backpropagation

- Convolutional neural networks (CNNs), inductive bias, self-supervision

- Auto-encoders, relation to PCA, parametric UMAP, sparse AE

- Variational auto-encoders (VAE) incl. ELBO, link to free energy

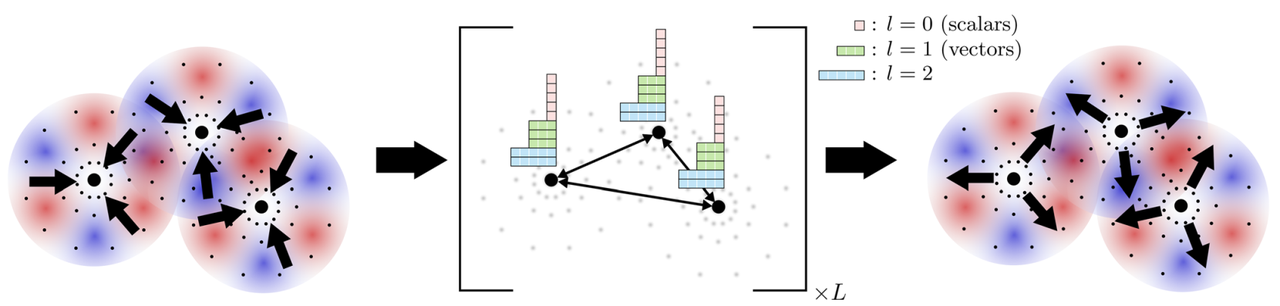

- Graph neural networks (GNN)

- Attention, transformers

- Large language models

- Sampling, energy-based models (EBMs)

- Ethics of AI, AI Safety

- Flow-based methods: normalizing flows, flow matching, diffusion models

- Flow-based methods part 2

- Continuous diffusion models

- Equivariant ML with kernels: symmetries, groups, representations

- ML in AMO physics

- Q&A

Where and when

The course starts with a Python refresher in the tutorial on Oct 13th or 14th (identical content). Unless you are already familiar with Python and its basic scientific stack (Jupyter, NumPy, Matplotlib, SciPy), please take part: it will help you solve the computational exercises.

The main lectures are on Tuesdays and Thursdays from 9:00 to 10:45 in the Großer Hörsaal, Philosophenweg 12.

FAQ

Q: Do I need prior knowledge in machine learning?

A: No.

Q: I just want to learn the basics. Is this the right course?

A: The course has a steep learning curve and entails a significant workload. If you only want to cover the basics, please check for slower-paced alternatives such as “Machine Learning Essentials”.

Q: Is this course about deep learning?

A: Neural networks will play an important role, but this course is more about principles. While we will discuss architectural elements such as transformers, this course is not a detailed review of the latest architectures.

Q: Will this course be repeated next year?

A: Yes, like every MSc core course.

Q: Is there a textbook?

A: The course does not follow any single textbook, but the following are good sources: Hastie, Tibshirani, Friedman: The Elements of Statistical Learning and Prince: Understanding Deep Learning.

Q: What are the exam modalities?

A: To be admitted to the written exam at the end of the semester, you need to gain 50% of the points on the exercise sheets. The exercises are part computational, part pen-and-paper.